Title: An Integrated Framework for Bird Recognition using Dynamic Machine Learning-based Classification

Publisher: IEEE 28th ISCC

- Authors: Wiam Rabhi, Fatima Eljaimi, Walid Amara, Zakaria Charouh, Amal Ezzouhri, Houssam Benaboud, Moudathirou Ben Saindou, Fatima Ouardi

Abstract: Bird recognition in computer vision poses two main challenges: high intra-class variance and low inter-class variance. High intra-class variance refers to the significant variation in the appearance of individual birds within the same species, while low inter-class variance refers to the limited visual differences between distinct bird species. In this paper, we propose a robust integrated framework for bird recognition using a dynamic machine learning-based technique. Our system, built from multiple components, is designed to identify over 11,000 bird species. As part of this work, we propose two public datasets: the first (E-Moulouya BDD) contains over 13k bird images for detection tasks, and the second (LaSBiRD) contains about 5M labelled images of 11k species. Our experiments yielded promising results, with a mAP of 0.715 for detection and an accuracy of 96% for classification.

DOI:10.1109/ISCC58397.2023.10218182

- Graphical Abstract:

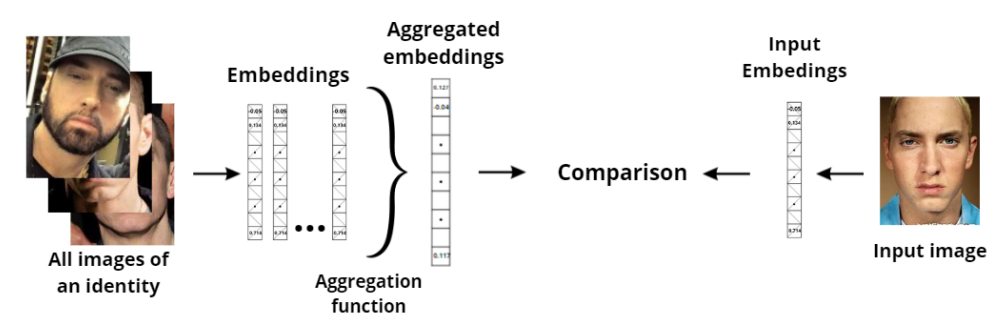

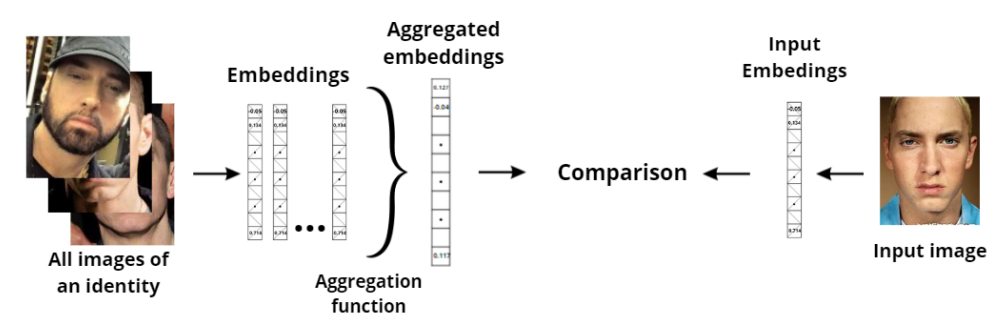

Title: Aggregating Multiple Embeddings: A Novel Approach to Enhance Reliability and Reduce Complexity in Facial Recognition

Publisher: IEEE 28th ISCC

- Authors: Houssam Benaboud, Walid Amara, Amal Ezzouhri, Fatima Eljaimi, Wiam Rabhi, Zakaria Charouh

Abstract: Facial recognition is widely used, but the reliability of the embeddings extracted by most computer vision-based approaches is challenged by the high similarity among human faces and by the effects of facial expressions and lighting. Our proposed approach aggregates multiple embeddings to generate a more robust reference for facial embedding comparison, and explores which distance metrics optimize comparison efficiency without increasing complexity. We apply our method to state-of-the-art models that extract embeddings from faces in an image and compare it with several existing approaches. It raises the accuracy of ResNet to 99.77%, FaceNet to 99.79%, and Inception-ResNet-V1 to 99.16%. Our approach preserves the inference time of the model while increasing its reliability, since the number of comparisons is kept to a minimum. Our results demonstrate that the proposed approach offers an effective solution for facial recognition in real-world environments.
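The aggregation idea can be illustrated with a small sketch. This is not the authors' implementation: it assumes that each identity's reference is the re-normalized mean of its L2-normalized embeddings and that a probe is matched to the nearest reference by cosine distance. The function names and the 0.4 threshold are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def aggregate(embeddings: List[Vector]) -> List[float]:
    """One reference per identity: mean of the normalized embeddings, re-normalized."""
    normed = [l2_normalize(e) for e in embeddings]
    dim = len(normed[0])
    mean = [sum(e[i] for e in normed) / len(normed) for i in range(dim)]
    return l2_normalize(mean)


def cosine_distance(a: Vector, b: Vector) -> float:
    a, b = l2_normalize(a), l2_normalize(b)
    return 1.0 - sum(x * y for x, y in zip(a, b))


def identify(probe: Vector, references: Dict[str, Vector], threshold: float = 0.4) -> Optional[str]:
    """One comparison per identity (not per stored image); None if no reference is close enough."""
    best_name, best_dist = None, float("inf")
    for name, ref in references.items():
        d = cosine_distance(probe, ref)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= threshold else None


refs = {
    "alice": aggregate([[1.0, 0.1, 0.0], [0.9, 0.0, 0.1], [1.0, 0.05, 0.05]]),
    "bob": aggregate([[0.0, 1.0, 0.1], [0.1, 0.9, 0.0]]),
}
print(identify([0.95, 0.05, 0.0], refs))  # alice
print(identify([0.0, 0.0, 1.0], refs))    # None
```

Because each identity is reduced to a single vector, the number of distance computations per query equals the number of identities, which is consistent with the abstract's point about keeping comparisons to a minimum.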

DOI:10.1109/ISCC58397.2023.10218214

- Graphical Abstract:

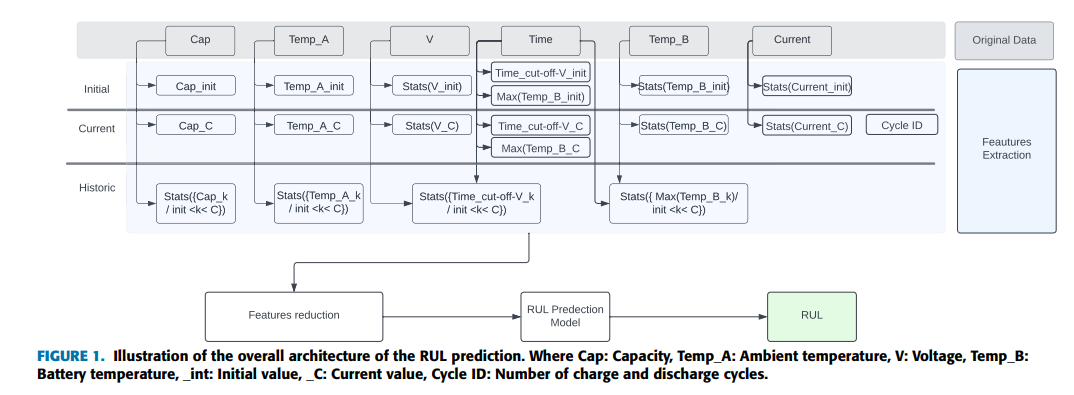

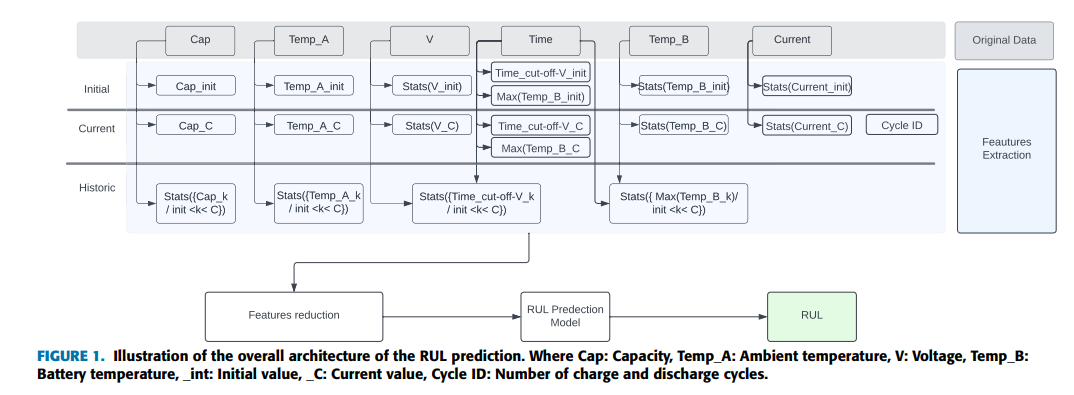

Title: A Data-Driven-Based Framework for Battery Remaining Useful Life Prediction

Publisher: IEEE Access

- Authors: Amal Ezzouhri, Zakaria Charouh, Mounir Ghogho, Zouhair Guennoun

Abstract: Electric vehicles are expected to dominate the vehicle fleet in the near future, driven by their zero pollutant emissions, dwindling fossil fuel reserves, comfort, and lightness. However, Battery Electric Vehicles (BEVs) suffer from gradual performance degradation caused by irreversible chemical and physical changes inside their batteries. Moreover, predicting the health and remaining useful life of BEV batteries is difficult because of various internal and external factors. In this paper, we propose an integrated data-driven framework for accurately predicting the Remaining Useful Life (RUL) of the lithium-ion batteries used in BEVs. As part of this work, we conducted a comprehensive analysis and comparison of publicly available battery datasets, providing an up-to-date list of data sources for the community. Our framework relies on a novel feature extraction strategy that accurately characterizes the battery, leading to improved RUL predictions. The features fall into three groups: initial state features, current state features, and historic state features. Experimental results on the NASA benchmark dataset show an average accuracy of 97% on batteries not used during the training phase, demonstrating the ability of our framework to handle batteries discharged under any conditions.
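A minimal sketch of how an RUL target and the three feature groups could be computed from a per-cycle capacity series. The end-of-life rule (capacity below 70% of the initial value), the window length, and every feature below are assumptions for illustration, not the paper's actual feature set.

```python
from typing import Dict, List, Optional


def remaining_useful_life(capacities: List[float], cycle: int, eol_ratio: float = 0.7) -> Optional[int]:
    """Cycles left after `cycle` until capacity first drops below eol_ratio * initial capacity.

    Returns None if end of life is never reached in the recorded data.
    """
    threshold = eol_ratio * capacities[0]
    for k in range(cycle, len(capacities)):
        if capacities[k] < threshold:
            return k - cycle
    return None


def extract_features(capacities: List[float], cycle: int, window: int = 3) -> Dict[str, float]:
    """Hypothetical features grouped as in the abstract: initial, current, and historic state."""
    history = capacities[max(0, cycle - window + 1): cycle + 1]
    slope = (history[-1] - history[0]) / (len(history) - 1) if len(history) > 1 else 0.0
    return {
        "initial_capacity": capacities[0],                  # initial state
        "current_capacity": capacities[cycle],              # current state
        "current_soh": capacities[cycle] / capacities[0],   # current state of health
        "historic_fade_rate": slope,                        # historic state: capacity change per cycle
    }


caps = [2.0, 1.9, 1.8, 1.7, 1.6, 1.5, 1.45, 1.3]  # Ah, toy data
print(remaining_useful_life(caps, cycle=2))  # 5
print(extract_features(caps, cycle=4))
```

A regressor would then be trained to map such feature vectors to the RUL label; evaluating it on batteries held out from training mirrors the test protocol described in the abstract.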

DOI:10.1109/ACCESS.2023.3286307

- Graphical Abstract:

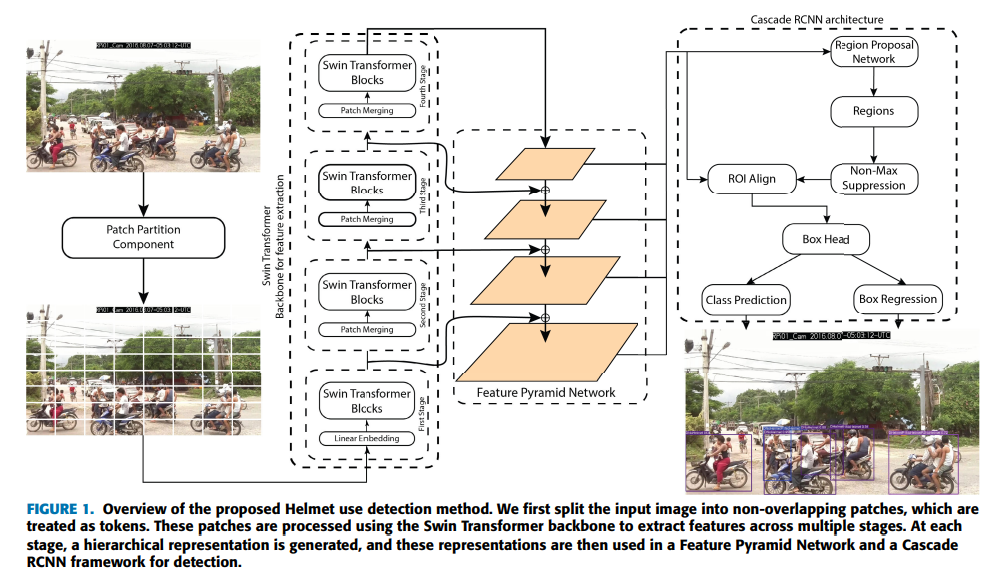

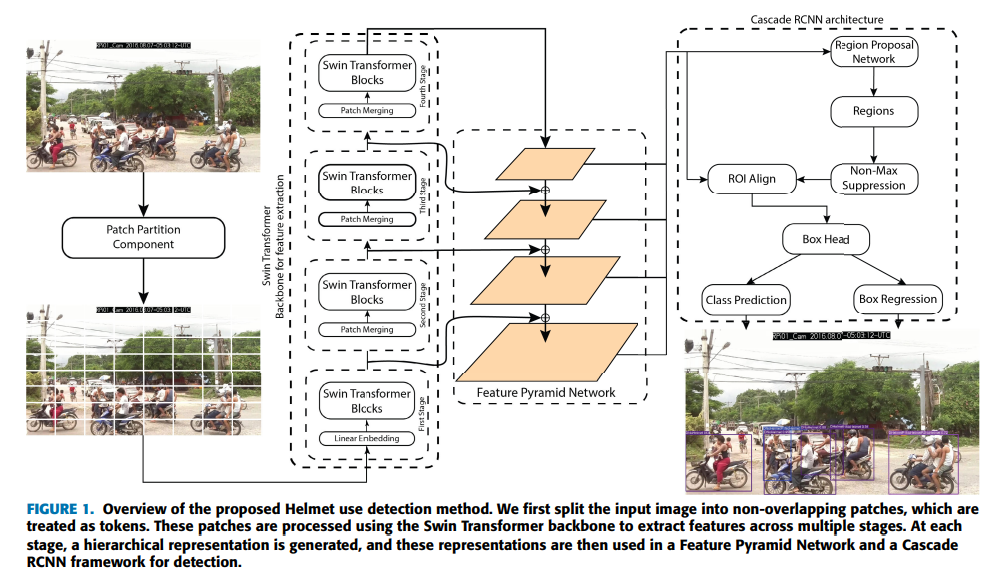

Title: A Swin Transformer-Based Approach for Motorcycle Helmet Detection

Publisher: IEEE Access

- Authors: Ayyoub Bouhayane, Zakaria Charouh, Mounir Ghogho, Zouhair Guennoun

Abstract: Automated detection of helmet use among motorcyclists from video surveillance has the potential to improve road safety by supporting enforcement initiatives. However, current detection approaches have many limitations; for instance, they cannot detect multiple passengers or function effectively in complex conditions. In this paper, we address the challenging problem of automated monitoring of helmet use using computer vision and machine learning. We propose a method based on the deep neural network models known as transformers. We use the base version of the Swin Transformer as a backbone for feature extraction, and combine a Feature Pyramid Network (FPN) neck with the Cascade Region-based Convolutional Neural Network (Cascade R-CNN) framework for final detection. The effectiveness of the proposed method is demonstrated through extensive experiments and comparisons with existing approaches. Our method achieves a mean Average Precision (mAP) of 30.4, outperforming state-of-the-art detection methods.
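The backbone/neck/detector combination can be sketched as a configuration fragment. The field names follow MMDetection's dict-style configs as we understand them and should be checked against the installed version; this is an assumption, not the authors' configuration. The Swin-B hyperparameters (embedding width 128, depths 2-2-18-2, heads 4-8-16-32) come from the Swin Transformer paper.

```python
# Swin-B hyperparameters: embedding width C, blocks per stage, attention heads per stage.
embed_dims = 128
depths = [2, 2, 18, 2]
num_heads = [4, 8, 16, 32]

# Each Swin stage halves the resolution and doubles the width, so the four
# stage outputs have C, 2C, 4C and 8C channels; the FPN must accept exactly these.
stage_channels = [embed_dims * 2 ** i for i in range(len(depths))]

model = dict(
    type="CascadeRCNN",
    backbone=dict(
        type="SwinTransformer",
        embed_dims=embed_dims,
        depths=depths,
        num_heads=num_heads,
        window_size=7,
        out_indices=(0, 1, 2, 3),  # expose all four stages to the neck
    ),
    neck=dict(
        type="FPN",
        in_channels=stage_channels,  # must match the backbone stage widths
        out_channels=256,
        num_outs=5,
    ),
    # The RPN head and the three-stage cascade RoI head are omitted from this fragment.
)

print(model["neck"]["in_channels"])  # [128, 256, 512, 1024]
```

The only derived value here is the FPN input width list; keeping it computed from `embed_dims` avoids a silent mismatch if a different Swin variant is swapped in.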

DOI:10.1109/ACCESS.2023.3296309

Title: LASBIRD: LArge Scale BIrd Recognition Dataset

Publisher: IEEE Dataport

- Authors: Fatima EL JAIMI, Wiam RABHI, Walid AMARA, Zakaria CHAROUH, Houssam BENABOUD, Moudathirou BEN SAINDOU

Abstract: The dataset consists of a large collection of images of about 11,000 different species of birds, with a total of 5 million images. This dataset represents a valuable resource for researchers, conservationists, and bird enthusiasts alike, allowing for a more comprehensive understanding of the diversity and distribution of avian species around the world. The data could be used for a wide range of applications, including species identification, biodiversity monitoring, and ecological research. The sheer size of the dataset makes it a powerful tool for machine learning and computer vision algorithms, enabling the development of accurate and efficient automated bird identification systems. Overall, this dataset represents an unprecedented opportunity to advance our knowledge of birds and their role in the natural world.

DOI:10.21227/s3xd-2s66

Title: E-MOULOUYA BDD: Extended Moulouya Bird Detection Dataset

Publisher: IEEE Dataport

- Authors: Wiam RABHI, Fatima EL JAIMI, Walid AMARA, Zakaria CHAROUH, Houssam BENABOUD, Moudathirou BEN SAINDOU

Abstract: The Extended Moulouya Bird Detection Dataset (E-Moulouya BDD) is a comprehensive collection of annotated images for bird detection. It combines three datasets, namely the XMBA dataset, the Rest Birds Dataset, and the D-Birds Dataset, which were merged and cleaned to provide a consistent, unified labeling format. The E-Moulouya BDD comprises 13,000 images annotated in this standardized format. The dataset is publicly available on IEEE DataPort, making it readily accessible to the scientific community. The E-Moulouya BDD is a valuable addition to existing bird detection datasets, and its availability is expected to facilitate and improve research in this field.
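Unifying labels from several sources typically means converting every annotation into one box convention. The sketch below assumes, purely for illustration, that some sources use absolute corner boxes (Pascal VOC style) and that the target is the normalized center format used by YOLO; the abstract does not state which formats the three source datasets or the final dataset use.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]


def voc_to_yolo(box: Box, img_w: int, img_h: int) -> Box:
    """(xmin, ymin, xmax, ymax) in pixels -> (x_center, y_center, width, height) in [0, 1]."""
    xmin, ymin, xmax, ymax = box
    # Clip to the image bounds, a common clean-up step when merging datasets.
    xmin, xmax = max(0.0, xmin), min(float(img_w), xmax)
    ymin, ymax = max(0.0, ymin), min(float(img_h), ymax)
    w, h = xmax - xmin, ymax - ymin
    return ((xmin + w / 2) / img_w, (ymin + h / 2) / img_h, w / img_w, h / img_h)


def to_yolo_lines(boxes: Sequence[Box], img_w: int, img_h: int, class_id: int = 0) -> List[str]:
    """One 'class cx cy w h' line per valid box; degenerate boxes are dropped."""
    lines = []
    for box in boxes:
        cx, cy, w, h = voc_to_yolo(box, img_w, img_h)
        if w > 0 and h > 0:
            lines.append(f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines


# The second box lies outside the 640x480 image after clipping and is discarded.
print(to_yolo_lines([(100, 50, 300, 250), (700, 10, 650, 40)], img_w=640, img_h=480))
# ['0 0.312500 0.312500 0.312500 0.416667']
```

With a single "bird" class, `class_id` stays 0; dropping boxes that collapse after clipping is one example of the kind of clean-up a merge requires.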

DOI:10.21227/es8y-cr94

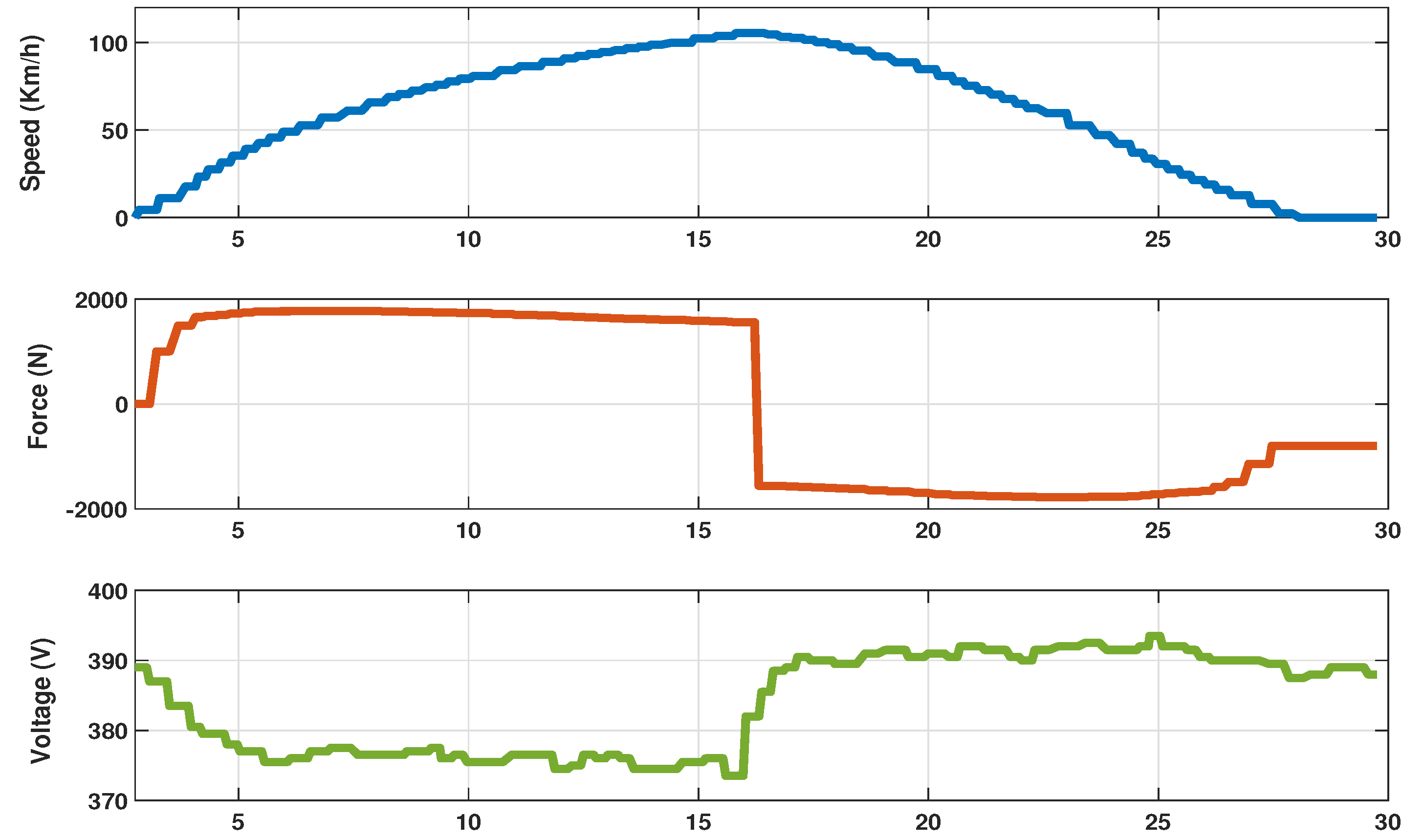

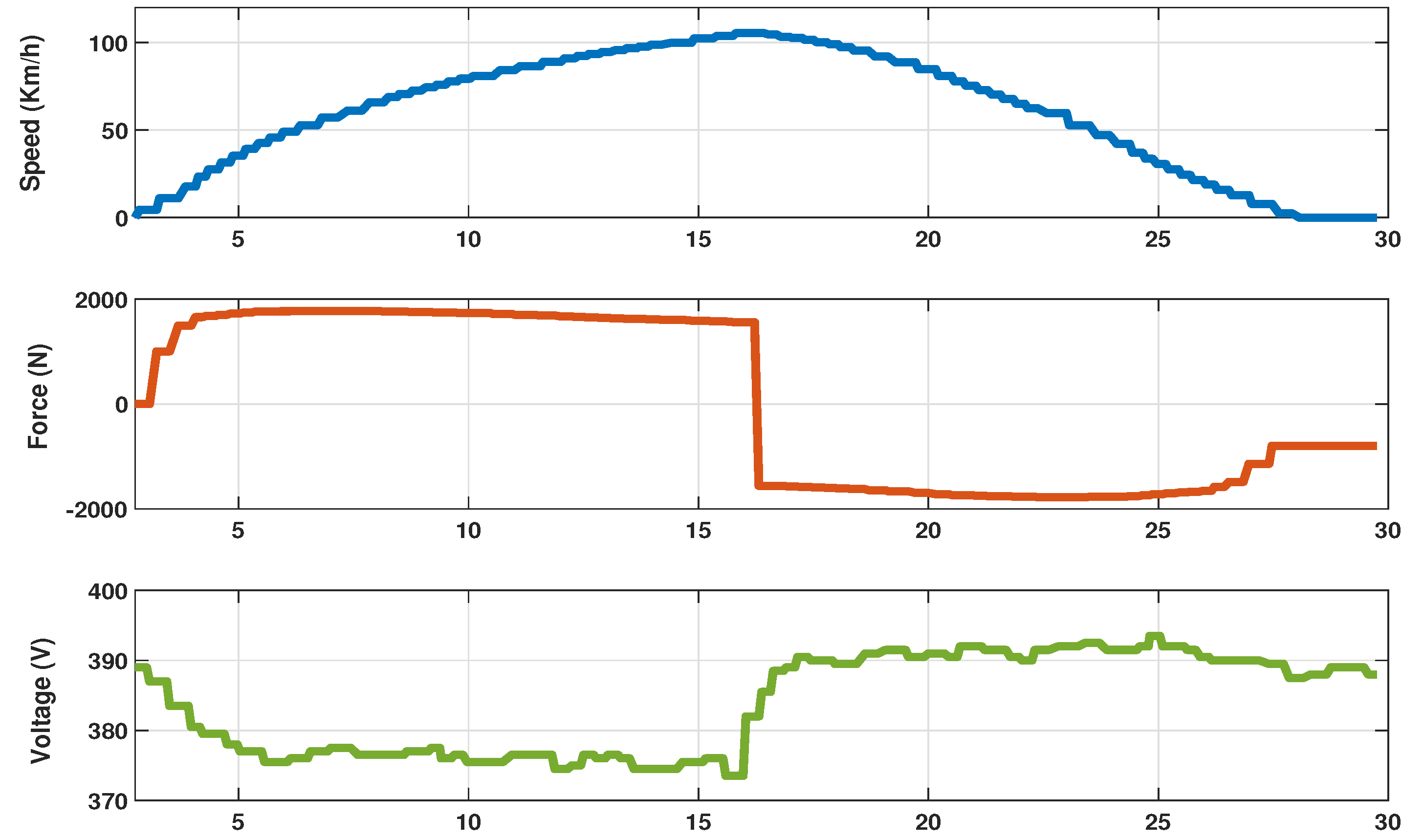

Title: Energy Consumption Prediction and Analysis for Electric Vehicles: A Hybrid Approach

Publisher: Energies

- Authors: Hamza Mediouni, Amal Ezzouhri, Zakaria Charouh, Khadija El Harouri, Soumia El Hani, Mounir Ghogho

Abstract: Range anxiety remains one of the main hurdles to the widespread adoption of electric vehicles (EVs). Mitigating it requires accurate energy consumption prediction. In this study, we propose a hybrid approach toward this objective that takes into account driving behavior, road conditions, the natural environment, and additional weight. The main components of the EV were simulated using physical and equation-based models, and a rich synthetic dataset covering different driving scenarios was then constructed. Real-world data were also collected using a city car. A machine learning model was built to map mechanical power to electric power. The proposed predictive method achieved an R² of 0.99 on synthetic test data and an R² of 0.98 on real-world data. Finally, the study investigates the instantaneous regenerative braking power efficiency as a function of the deceleration level.
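The physical part of such a hybrid model is often the standard longitudinal road-load equation, which turns speed, acceleration, road grade, and total mass (including any additional load) into traction power at the wheels. The sketch below uses that textbook equation with hypothetical parameter values; the paper's component models and coefficients may differ. It also shows the R² metric quoted in the abstract.

```python
import math
from typing import Sequence

G = 9.81  # gravitational acceleration, m/s^2


def mechanical_power(v: float, a: float, slope_rad: float, mass: float,
                     c_rr: float = 0.01, c_d: float = 0.3, area: float = 2.2, rho: float = 1.2) -> float:
    """Traction power at the wheels (W):
    P = v * (m*a + m*g*c_rr*cos(theta) + m*g*sin(theta) + 0.5*rho*c_d*A*v^2).
    Negative values correspond to braking, when regeneration may occur.
    """
    inertia = mass * a
    rolling = mass * G * c_rr * math.cos(slope_rad)
    grade = mass * G * math.sin(slope_rad)
    aero = 0.5 * rho * c_d * area * v ** 2
    return v * (inertia + rolling + grade + aero)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# 1500 kg (vehicle, driver and extra load) cruising at 15 m/s on a flat road.
print(round(mechanical_power(v=15.0, a=0.0, slope_rad=0.0, mass=1500.0)))  # 3544
```

In the hybrid scheme described above, a learned model would take this mechanical power (and possibly other signals) as input and predict the electric power drawn from the battery; that model is not shown here.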

DOI:10.3390/en15176490

Title: Video Analysis and Rule-Based Reasoning for Driving Maneuver Classification at Intersections

Publisher: IEEE Access

- Authors: Zakaria Charouh, Amal Ezzouhri, Mounir Ghogho, Zouhair Guennoun

Abstract: We propose a system for monitoring driving maneuvers at road intersections using rule-based reasoning and deep learning-based computer vision techniques. In addition to detecting and classifying turning movements online, the system detects violations such as ignoring STOP signs and failing to yield the right-of-way to other drivers. Most frameworks proposed in the literature do not distinguish between temporarily and permanently stopped vehicles; however, an accurate right-of-way study requires excluding permanently stopped vehicles so that they do not confound the results. To this end, we also propose a low-cost Convolutional Neural Network (CNN)-based object detection framework able to detect moving and temporarily stopped vehicles. The detection framework combines the reasoning system with background subtraction and a CNN-based object detector. The results are promising. Compared to conventional CNN-based methods, the detection framework reduces the execution time of the object detection module by about 30% (54.1 ms instead of 75 ms per image) while preserving the same detection reliability. The accuracy of trajectory recognition is 95.32%, that of zero-speed detection is 96.67%, and right-of-way detection was perfect.
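Two of the rules can be sketched as follows. This is an illustrative assumption, not the paper's rule base: turns are classified from the change in heading between the first and last segments of a track (in ground-plane coordinates with y pointing up), and stops are classified by the longest run of near-zero speed. All thresholds are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def heading(p: Point, q: Point) -> float:
    return math.atan2(q[1] - p[1], q[0] - p[0])


def classify_maneuver(track: List[Point], straight_deg: float = 30.0, uturn_deg: float = 150.0) -> str:
    """Classify a track from the heading change between its first and last segments.

    Assumes y points up, so a positive (counter-clockwise) change is a left turn.
    """
    delta = heading(track[-2], track[-1]) - heading(track[0], track[1])
    delta = math.degrees(math.atan2(math.sin(delta), math.cos(delta)))  # wrap to (-180, 180]
    if abs(delta) < straight_deg:
        return "straight"
    if abs(delta) > uturn_deg:
        return "u-turn"
    return "left" if delta > 0 else "right"


def stop_state(speeds: List[float], eps: float = 0.2, min_stop: int = 5, max_stop: int = 300) -> str:
    """'moving', 'temporary_stop' (e.g. a full stop at a STOP sign) or
    'permanent_stop' (e.g. a parked vehicle, to exclude from right-of-way analysis),
    based on the longest run of frames with speed below eps."""
    longest = run = 0
    for s in speeds:
        run = run + 1 if s < eps else 0
        longest = max(longest, run)
    if longest >= max_stop:
        return "permanent_stop"
    if longest >= min_stop:
        return "temporary_stop"
    return "moving"


print(classify_maneuver([(0, 0), (0, 5), (0, 10), (-5, 10)]))  # left
print(stop_state([5.0] * 10 + [0.0] * 8 + [4.0] * 10))          # temporary_stop
```

Under these rules, a vehicle that crosses a STOP-controlled approach while its state stays "moving" would be flagged as a STOP violation, and "permanent_stop" tracks would be dropped before the right-of-way analysis.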

DOI:10.1109/ACCESS.2022.3169140

- Graphical Abstract:

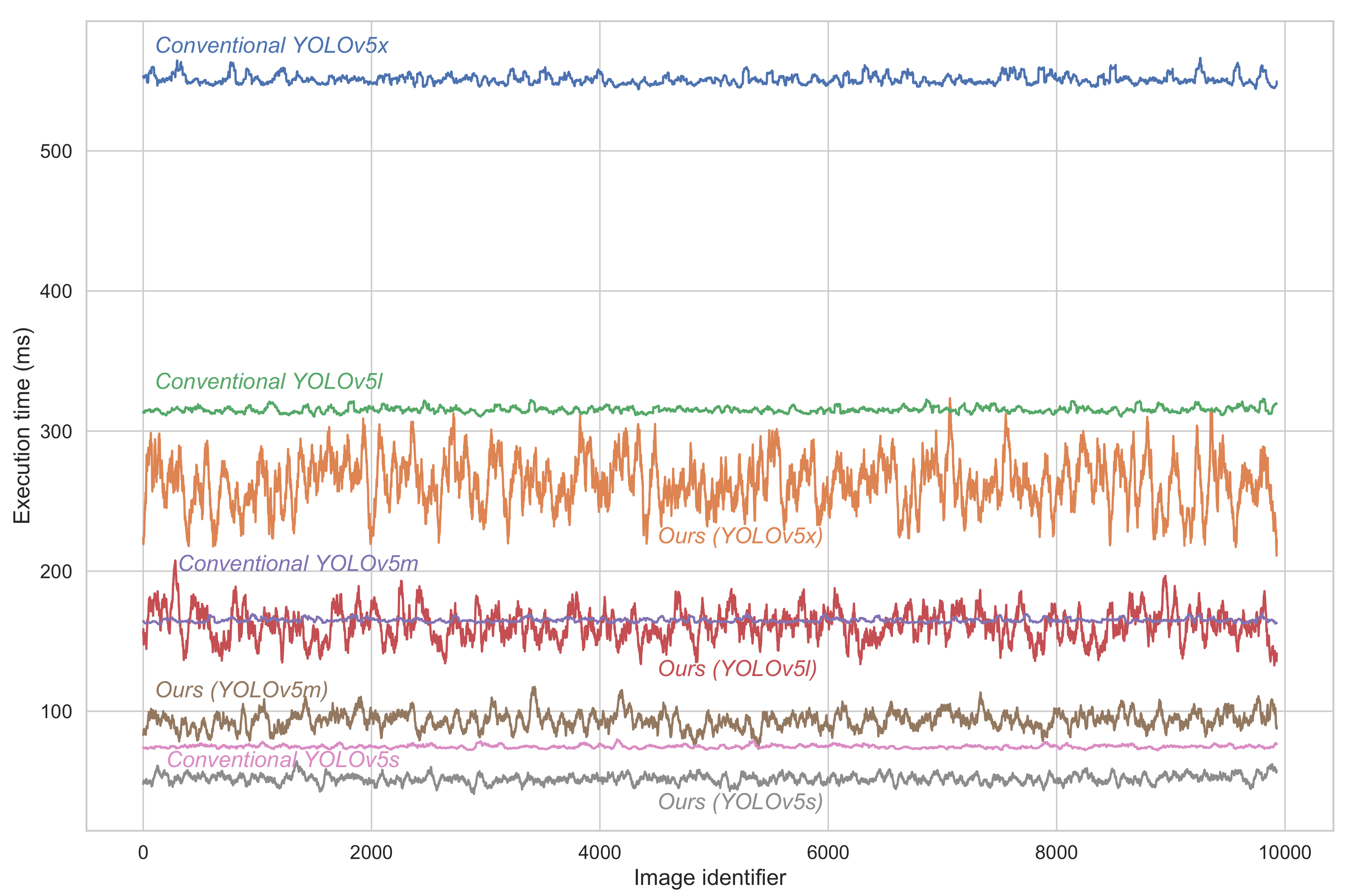

Title: A Resource-Efficient CNN-Based Method for Moving Vehicle Detection

Publisher: Sensors

- Authors: Zakaria Charouh, Amal Ezzouhri, Mounir Ghogho, Zouhair Guennoun

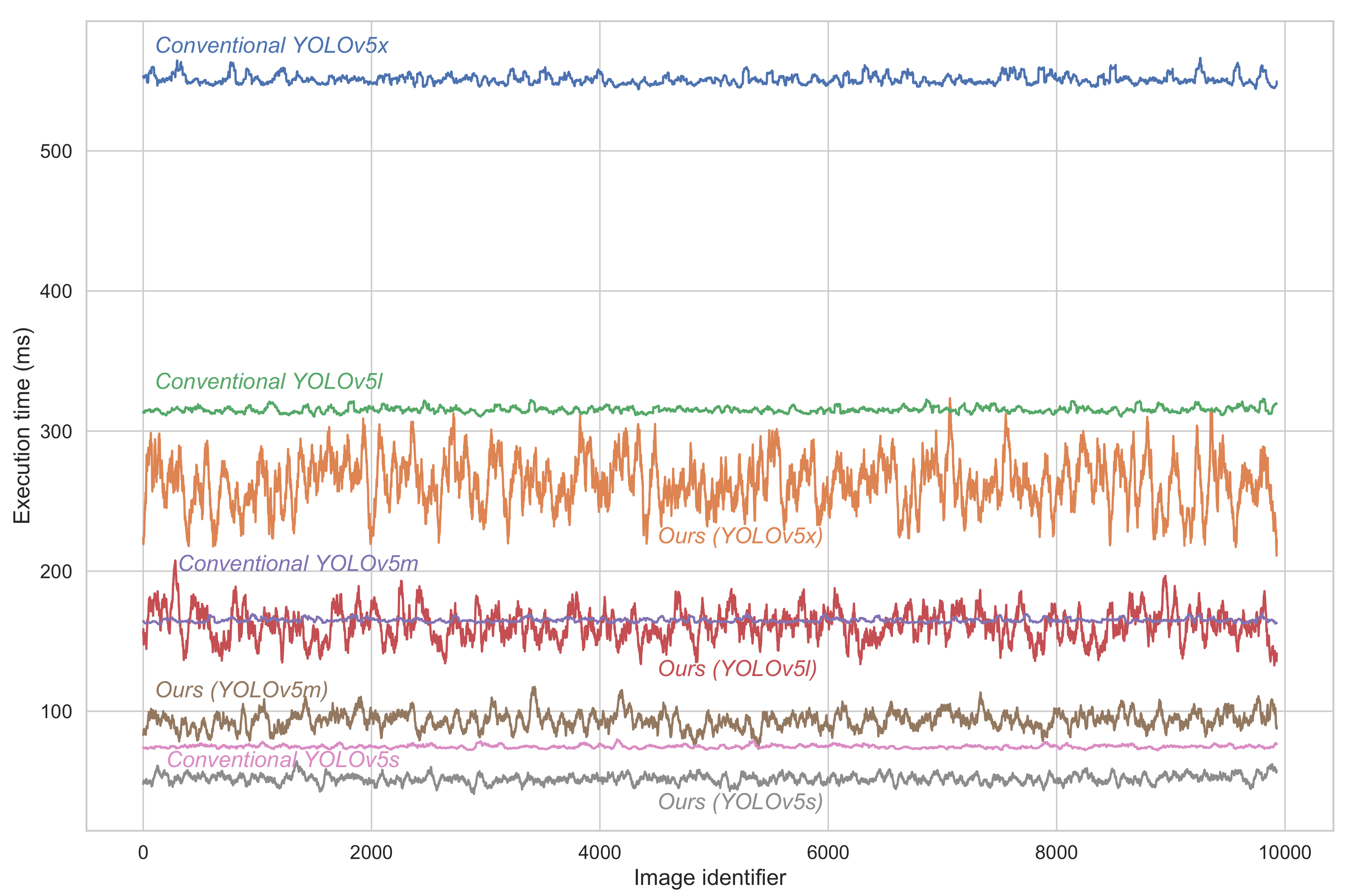

Abstract: There has been significant interest in using Convolutional Neural Network (CNN)-based methods for Automated Vehicular Surveillance (AVS) systems. Although these methods provide high accuracy, they are computationally expensive. On the other hand, Background Subtraction (BS)-based approaches are lightweight but provide insufficient information for tasks such as monitoring driving behavior and detecting traffic rule violations. In this paper, we propose a framework to reduce the complexity of CNN-based AVS methods, in which a BS-based module is introduced as a preprocessing step to reduce the number of convolution operations executed by the CNN module. The BS-based module generates image candidates containing only moving objects. A CNN-based detector with the appropriate number of convolutions is then applied to each image candidate to handle the overlapping problem and improve detection performance. Four state-of-the-art CNN-based detection architectures were benchmarked as base models of the detection cores to evaluate the proposed framework, using a large-scale dataset. The computational complexity reduction achieved by the framework grows with the complexity of the underlying CNN architecture (e.g., 30.6% for YOLOv5s with 7.3M parameters and 52.2% for YOLOv5x with 87.7M parameters), without undermining accuracy.
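The candidate-generation step can be sketched as connected-component analysis on the background-subtraction mask: each foreground blob becomes a padded crop, and only those crops are passed to the CNN. This is a simplified, dependency-free illustration with hypothetical parameters (`min_area`, `pad`); the paper's module (and the choice of detector per candidate) is not reproduced here.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) with x1, y1 exclusive


def candidate_boxes(mask: List[List[int]], min_area: int = 2, pad: int = 1) -> List[Box]:
    """Padded bounding boxes of 4-connected foreground blobs in a binary BS mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            queue = deque([(y, x)])
            seen[y][x] = True
            ys, xs = [], []
            while queue:  # breadth-first flood fill of one blob
                cy, cx = queue.popleft()
                ys.append(cy)
                xs.append(cx)
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(ys) >= min_area:  # drop tiny noise blobs
                boxes.append((max(0, min(xs) - pad), max(0, min(ys) - pad),
                              min(w, max(xs) + 1 + pad), min(h, max(ys) + 1 + pad)))
    return boxes


def processed_fraction(boxes: List[Box], w: int, h: int) -> float:
    """Share of the frame area sent to the CNN (overlapping crops counted twice)."""
    return sum((x1 - x0) * (y1 - y0) for x0, y0, x1, y1 in boxes) / (w * h)


mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 0],  # isolated pixel: treated as noise
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
boxes = candidate_boxes(mask)
print(boxes)                            # [(0, 0, 4, 4)]
print(processed_fraction(boxes, 8, 6))  # about 0.333
```

The smaller this fraction, the fewer convolutions the detector performs, which is the intuition behind the complexity reductions reported in the abstract.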

DOI:10.3390/s22031193

Title: Robust Deep Learning-Based Driver Distraction Detection and Classification

Publisher: IEEE Access

- Authors: Amal Ezzouhri, Zakaria Charouh, Mounir Ghogho, Zouhair Guennoun

Abstract: Driver distraction is a major cause of road accidents. Distracting activities while driving include text messaging and talking on the phone. In this paper, we propose a robust driver distraction detection system that uses deep learning to extract the driver's state from the recordings of an onboard camera. We consider ten driving activities: normal driving and nine distracted driving behaviors. Nine drivers took part in the experiments, and each was asked to perform the ten activities in both naturalistic and simulated driving situations. The main feature of the proposed solution is the extraction of the driver's body parts, using deep learning-based segmentation, before the distraction detection and classification task. Experimental results show that the segmentation module significantly improves classification performance. The average accuracy of the proposed solution exceeds 96% on our dataset and 95% on the public AUC dataset.
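The segment-then-classify ordering can be sketched as below. The segmentation and classification models are stand-in callables (hypothetical placeholders, not the paper's networks); the point is only that the classifier receives a frame in which everything outside the predicted body-part mask has been zeroed.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale frame, row-major
Mask = List[List[int]]     # 1 = driver body part, 0 = background


def keep_body_parts(image: Image, mask: Mask) -> Image:
    """Zero every pixel that the segmentation model did not assign to the driver's body."""
    return [[p if m else 0.0 for p, m in zip(row, mrow)] for row, mrow in zip(image, mask)]


def classify_frame(image: Image,
                   segment: Callable[[Image], Mask],
                   classify: Callable[[Image], int]) -> int:
    """Two-stage pipeline: segmentation first, then the activity classifier
    (returning one of the ten class indices) sees only the masked frame."""
    return classify(keep_body_parts(image, segment(image)))


# Toy stand-ins: a threshold "segmenter" and a trivial "classifier".
toy_segment = lambda im: [[1 if p > 0.5 else 0 for p in row] for row in im]
toy_classify = lambda im: int(sum(map(sum, im)) > 1.0)

frame = [[0.2, 0.9], [0.8, 0.1]]
print(keep_body_parts(frame, [[0, 1], [1, 0]]))           # [[0.0, 0.9], [0.8, 0.0]]
print(classify_frame(frame, toy_segment, toy_classify))   # 1
```

Removing background pixels before classification is one plausible reason the segmentation step helps: the classifier cannot latch onto cabin or lighting cues unrelated to the driver's posture.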

DOI:10.1109/ACCESS.2021.3133797

Title: EV ENERGY CONSUMPTION AND SPEED PROFILES DATASET (EVECS)

Publisher: IEEE Dataport

- Authors: Amal Ezzouhri, Zakaria Charouh, Hamza Mediouni, Mounir Ghogho, Zouhair Guennoun

Abstract: Two electric vehicles were used in this study, namely the Renault Zoe Q210 2016 and the Renault Kangoo ZE 2018. Both EVs were equipped with data loggers connected to the CAN bus, recording the high-voltage (HV) battery current, voltage, and state of charge (SoC), as well as instantaneous speed. A GPS logger mobile application was also used to record GPS tracks and altitudes. Data were collected from six drivers (four men and two women) with driving experience ranging from less than two months to more than 10 years, on a variety of roads and in various driving conditions, covering nearly 200 kilometers in total.
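Logged voltage, current, and speed can be turned into a consumption figure by numerical integration. The sketch assumes timestamps in seconds, speed in km/h, and current positive while discharging (so regeneration reduces the total); the actual column names, units, and sign convention of the dataset should be checked before use.

```python
from typing import Sequence


def energy_wh(t_s: Sequence[float], voltage_v: Sequence[float], current_a: Sequence[float]) -> float:
    """Net battery energy (Wh) by trapezoidal integration of P = V * I over time."""
    e_j = 0.0
    for k in range(1, len(t_s)):
        p0 = voltage_v[k - 1] * current_a[k - 1]
        p1 = voltage_v[k] * current_a[k]
        e_j += 0.5 * (p0 + p1) * (t_s[k] - t_s[k - 1])
    return e_j / 3600.0  # J -> Wh


def distance_km(t_s: Sequence[float], speed_kmh: Sequence[float]) -> float:
    """Distance travelled (km) by trapezoidal integration of speed over time."""
    d = 0.0
    for k in range(1, len(t_s)):
        d += 0.5 * (speed_kmh[k - 1] + speed_kmh[k]) / 3600.0 * (t_s[k] - t_s[k - 1])
    return d


t = [0.0, 60.0, 120.0]
e = energy_wh(t, [360.0] * 3, [20.0] * 3)  # 7.2 kW for two minutes
d = distance_km(t, [60.0] * 3)             # 60 km/h for two minutes
print(e, d, e / d)                         # about 240 Wh, 2 km, 120 Wh/km
```

Dividing net energy by distance gives a per-trip consumption in Wh/km, a convenient target for comparing drivers or road types in this kind of dataset.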

DOI:10.21227/t4e1-w222

Title: Howdrive 3D: Driver Distraction Dataset

Publisher: IEEE Dataport

- Authors: Amal Ezzouhri, Zakaria Charouh, Mounir Ghogho, Zouhair Guennoun

Abstract: To study driver behavior in real traffic situations, we conducted experiments using an instrumented vehicle equipped with (i) a camera installed above the vehicle's side window and oriented toward the driver, and (ii) a Mobile Digital Video Recorder (MDVR). Part of the data was collected under real-world driving conditions. The other part was collected by asking drivers to simulate different types of driving behavior in the instrumented vehicle while it was stationary, for safety reasons. Nine drivers were involved in the experiment. Each of them was asked to perform the ten activities separately (i.e., one activity per video sequence) while driving or pretending to drive, which took about 15 minutes per driver and yielded about 450 images per class per driver. After manual examination, about 38,000 images were retained.
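As a rough consistency check on these counts (assuming every driver contributed about 450 images to each of the ten classes):

$$
9\ \text{drivers} \times 10\ \text{classes} \times 450\ \frac{\text{images}}{\text{class}\cdot\text{driver}} \approx 40{,}500\ \text{images}
$$

Retaining about 38,000 of them implies that roughly 2,500 images (about 6%) were discarded during manual examination.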

DOI:10.21227/f9z3-0438

Title: Headway and Following Distance Estimation using a Monocular Camera and Deep Learning

Publisher: 13th ICAART

- Authors: Zakaria Charouh, Amal Ezzouhri, Mounir Ghogho, Zouhair Guennoun

Abstract: We propose a system for monitoring headway and following distance using a roadside camera and deep learning-based computer vision techniques. The system consists of a vehicle detector and tracker, a speed estimator, and a headway estimator. Both motion-based and appearance-based methods for vehicle detection are investigated; appearance-based methods using convolutional neural networks are found to be the most appropriate given the system's high detection accuracy requirements. Headway is then estimated from the vehicles detected in a video sequence, and following distance is estimated from the headway and speed estimates. We also propose methods to assess the performance of the headway and speed estimation processes. The proposed monitoring system has been applied to data that we collected using a roadside camera. The root mean square error of the headway estimation is around 0.045 seconds.
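The relationship between the three quantities can be sketched as follows, assuming that time headway is the interval between consecutive vehicles crossing the same reference line in the camera view and that following distance is approximated as the follower's speed times that headway (vehicle length ignored). These definitions are illustrative assumptions; the paper's exact formulation may differ.

```python
import math
from typing import List, Sequence


def headways(crossing_times_s: Sequence[float]) -> List[float]:
    """Time headway (s) between consecutive vehicles crossing the same reference line."""
    t = sorted(crossing_times_s)
    return [b - a for a, b in zip(t, t[1:])]


def following_distances(headways_s: Sequence[float], follower_speeds_ms: Sequence[float]) -> List[float]:
    """Following distance (m) approximated as each follower's speed times its time headway.

    follower_speeds_ms[i] is the speed of the vehicle that closes headway i
    (i.e. the second, third, ... vehicle to cross the line).
    """
    return [h * v for h, v in zip(headways_s, follower_speeds_ms)]


def rmse(estimates: Sequence[float], ground_truth: Sequence[float]) -> float:
    """Root mean square error, the metric reported for headway estimation."""
    return math.sqrt(sum((e - g) ** 2 for e, g in zip(estimates, ground_truth)) / len(estimates))


h = headways([10.0, 12.5, 14.0])          # [2.5, 1.5]
d = following_distances(h, [20.0, 15.0])  # [50.0, 22.5]
print(h, d, rmse(h, [2.45, 1.55]))        # RMSE about 0.05 s
```

Because following distance is a product of two estimates, errors in headway and in speed both propagate into it, which is why the abstract evaluates the two estimators separately.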

DOI:10.5220/0010253308450850

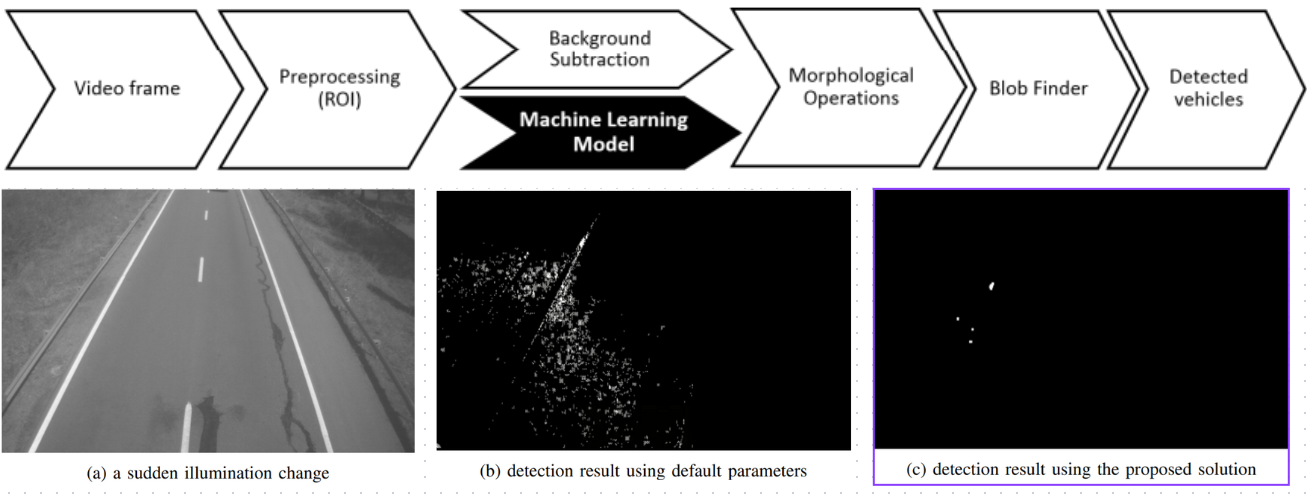

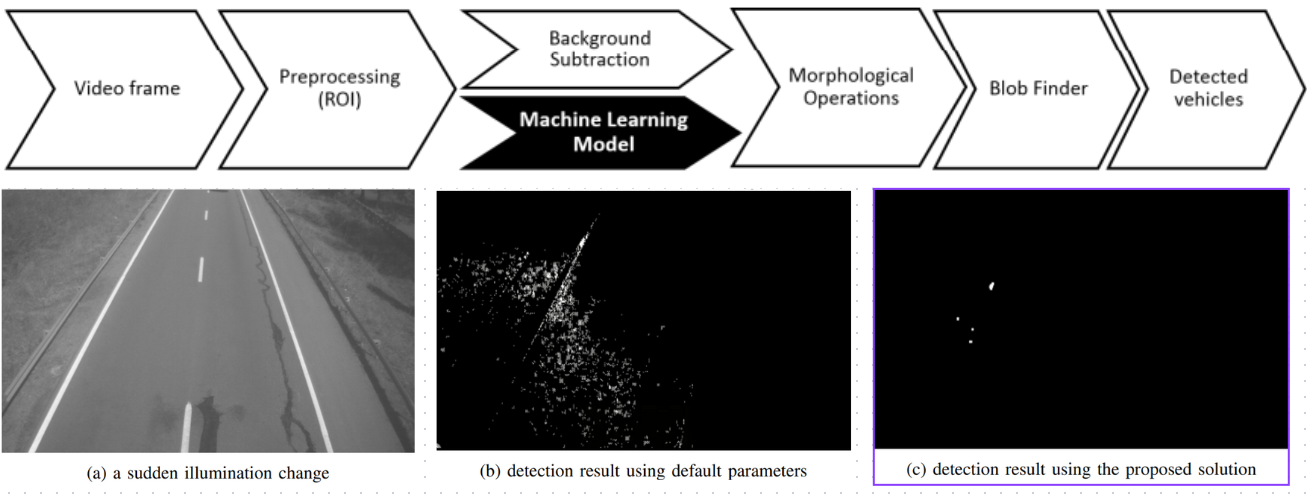

Title: Improved Background Subtraction-Based Moving Vehicle Detection by Optimizing Morphological Operations using Machine Learning

Publisher: IEEE 13th INISTA

- Authors: Zakaria Charouh, Mounir Ghogho, Zouhair Guennoun

Abstract: Object detection is the most important component of Automated Vehicular Surveillance (AVS) systems. Moving vehicle detection based on background subtraction with fixed morphological parameters is a popular approach in AVS systems. However, its performance deteriorates in the presence of sudden illumination changes in the scene. To address this issue, this paper proposes a machine learning-based method that adjusts the morphological parameters to illumination changes in real time. The inputs to the machine learning models are the first-, second-, third-, and fourth-order statistics of the grayscale images, and the outputs are the appropriate morphological parameters. The resulting background subtraction-based object detection is shown to be robust to illumination changes and to significantly outperform the conventional approach. Further, an artificial neural network (ANN) is shown to provide better performance than Naive Bayes and K-Nearest Neighbours models.
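The feature side of this method is straightforward to sketch: the first- to fourth-order statistics of a grayscale frame are its mean, variance, skewness, and kurtosis. For the mapping to a morphological parameter, the sketch swaps in a 1-nearest-neighbour lookup for brevity (the abstract reports that an ANN performed best); the training pairs and the choice of kernel size as the predicted parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Features = Tuple[float, float, float, float]


def moment_features(pixels: Sequence[float]) -> Features:
    """Mean, variance, skewness and (non-excess) kurtosis of the grayscale intensities."""
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((p - mean) ** 2 for p in pixels) / n
    std = math.sqrt(var)
    if std == 0:  # flat frame: higher-order moments are undefined, report zeros
        return mean, 0.0, 0.0, 0.0
    skew = sum((p - mean) ** 3 for p in pixels) / (n * std ** 3)
    kurt = sum((p - mean) ** 4 for p in pixels) / (n * std ** 4)
    return mean, var, skew, kurt


def predict_kernel_size(features: Sequence[float], training: List[Tuple[Features, int]]) -> int:
    """1-NN: reuse the kernel size of the most similar training frame.
    In practice the features should be standardized first, since their scales differ widely."""
    _, size = min(training, key=lambda item: math.dist(item[0], features))
    return size


# Hypothetical (features, best kernel size) pairs, e.g. a dim scene and a bright one.
training = [((50.0, 400.0, 0.5, 2.5), 3), ((180.0, 2500.0, -0.3, 3.0), 7)]
print(moment_features([0, 0, 255, 255]))                          # (127.5, 16256.25, 0.0, 1.0)
print(predict_kernel_size((55.0, 420.0, 0.4, 2.6), training))     # 3
```

Recomputing these four statistics per frame is cheap, which is what makes real-time adjustment of the morphological parameters feasible.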

DOI:10.1109/INISTA.2019.8778263

Title: Application of Wireless Networks and Optical Network to NextGen Aircrafts

Publisher: IEEE RAINS 2016

- Authors: Zakaria Charouh, Habiba Chaoui

Abstract: Almost every industry is developing rapidly, and the aviation industry is no exception. It is therefore crucial to strengthen the focus on network security for new Aircraft Data Communication Systems, since current on-board network deployments rely mainly on wired technology. With this in mind, many aerospace working groups have already begun pursuing the idea of an AWN (Avionic Wireless Network). The main goals are to reduce power consumption, financial costs, and the difficulty of wiring (weight, design, etc.), and to make the use of mobile devices possible. An optical network design is also suggested as an alternative to conventional on-board networks in upcoming avionic systems. In this paper, we discuss the relevance of these technologies with respect to avionic requirements in order to select the most suitable and reliable one(s).

DOI:10.1109/RAINS.2016.7764409